Background tasks in Python with Celery and a Redis message broker

How do you run a background task with Python Celery? How do you configure Celery to use Redis as its message broker?

This tutorial is not tied to any particular Python framework. If you use Celery with Django, Flask or FastAPI, you should be able to follow the steps here just fine.

Background Task

A background task lets you handle long-running processes behind the scenes. A common use case is avoiding HTTP timeout errors in web applications; another is building an asynchronous workflow between your applications. Because long-running processes are handled in the back-end, the client does not need to wait for the entire process to finish, so your system can respond immediately.
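To make the idea concrete without any infrastructure, here is a minimal, stdlib-only sketch of the same producer/consumer pattern. It is not Celery: a queue.Queue stands in for the message broker, a thread stands in for the worker, and the names jobs, results and worker are purely illustrative. The client enqueues jobs and returns immediately, while the slow work happens in the background.

```python
import queue
import threading
import time

# In-process stand-ins for a broker (the queue) and a worker (the thread).
# This only illustrates the pattern; Celery does this across processes via Redis.
jobs = queue.Queue()  # holds names to greet, or None as a stop signal
results = {}          # plays the role of a result back-end


def worker() -> None:
    """Consume jobs one by one until the None sentinel arrives."""
    while True:
        name = jobs.get()
        if name is None:
            break
        time.sleep(0.1)  # simulate a slow, long-running job
        results[name] = f"Hello {name}"


thread = threading.Thread(target=worker, daemon=True)
thread.start()

# The "client" enqueues work and carries on without waiting for it.
start = time.perf_counter()
for name in ("Rex", "Ana"):
    jobs.put(name)
enqueue_seconds = time.perf_counter() - start

jobs.put(None)  # ask the worker to stop after the queued jobs
thread.join()   # waiting happens only here, when we choose to
print(results)  # {'Rex': 'Hello Rex', 'Ana': 'Hello Ana'}
```

Enqueuing takes far less time than the 0.2 seconds of simulated work, which is exactly the property a background task gives a web request.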

In Python, the Celery project is a popular tool for managing background tasks. The library is simple to integrate, so you can get started quickly, yet robust enough to handle complex workflows. It also supports scheduling if you need to run a task periodically.
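Celery's periodic scheduler is called beat. As a minimal sketch, a schedule could be declared with the beat_schedule setting in the configuration module (celeryconfig.py) introduced below. The entry name is arbitrary, and the task name celery_app.app.hello is an assumption based on Celery's default naming (module path plus function name) for the hello task defined later in this tutorial.

```python
# Hypothetical addition to celery_app/celeryconfig.py (not part of the setup below).
beat_schedule: dict = {
    "say-hello-every-minute": {          # arbitrary, human-readable entry name
        "task": "celery_app.app.hello",  # assumed default name of the hello task
        "schedule": 60.0,                # seconds between runs
        "args": ("Rex",),                # positional arguments passed to the task
    },
}
```

A separate process started with celery -A celery_app.app beat then sends the job to the broker on that interval, and a running worker executes it like any other task.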

Background tasks in a Python Celery application

For this tutorial I am going to install Celery in a Python virtual environment. You will also need Python 3.7 or later on your machine (newer Celery 5.x releases require Python 3.8 or later). The commands I use here should work on both Linux and macOS.

```shell
mkdir -p celery_app/celery_app
cd celery_app
python3 -m venv .
source bin/activate
pip install celery
```

The example project structure below contains all the files we need to integrate with Celery. In a real-world setup, celery_app would be a package inside your application.

- celery_app
  - bin
  - celery_app
    - app.py
    - celeryconfig.py
    - tasks.py
    - __init__.py

app.py – This file creates the Celery instance and serves as the entry point when the Celery worker(s) start.

```python
from celery import Celery

celery_app: Celery = Celery("worker")

# Load settings from the celery_app/celeryconfig.py module.
celery_app.config_from_object("celery_app.celeryconfig")


# A task defined directly in the entry-point module.
@celery_app.task
def hello(name: str) -> str:
    return f"Hello {name}"
```

celeryconfig.py – This serves as the Celery configuration module. In it I define the message broker that receives the queued jobs and the result back-end that stores each job's result after it has been processed. I also use the imports setting to list the modules that contain task handlers, so tasks can be organized in separate modules of the application. For more configuration options, please see the Celery documentation.

```python
# Redis database 0 on the local machine is used for both queueing and results.
broker_url: str = "redis://127.0.0.1:6379/0"
result_backend: str = "redis://127.0.0.1:6379/0"

# Tasks are sent to this queue unless another queue is specified.
task_default_queue: str = "Project-Celery-App"

# Modules the worker imports at start-up so their tasks get registered.
imports: tuple = (
    "celery_app.tasks",
)
```

tasks.py – Using the Celery instance (celery_app in app.py) as a decorator, you can easily turn a plain function into a task. Think of this Python file as a module in your application that contains the functions you call to perform specific tasks. When such a task is called asynchronously, it is sent to the message broker (defined in the broker_url setting) as a job in the queue.

```python
from celery_app.app import celery_app


@celery_app.task
def another_task(name: str) -> str:
    return f"Hello {name}"
```

In addition, to show how task functions are registered when the Celery application starts, I also defined a task directly in the main app.py. So in this example, you should see both hello and another_task recognized as task handlers.

```python
@celery_app.task
def hello(name: str) -> str:
    return f"Hello {name}"
```

Message Broker

Another requirement for a Python Celery application is a message broker. Tasks are queued in the message broker and then consumed by the Celery worker(s).

Celery supports several message brokers; here I am going to cover the integration with Redis.

Redis Message Broker

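Install the redis-py client library for Python (pip install redis) so that Celery can talk to the Redis server. Alternatively, pip install "celery[redis]" installs Celery together with its Redis dependencies.

Here I am running the Redis server in a Docker container, using the official redis Docker image.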

```shell
docker run --rm --name redis-broker -p 6379:6379 -d redis
```
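Before starting a worker, it can help to confirm that the broker is actually reachable. Below is a small stdlib-only sketch; the helper name redis_is_reachable is hypothetical. It parses the same URL as broker_url and sends Redis's inline PING command, expecting a +PONG reply. It assumes a server without a password or TLS (an AUTH-protected server answers with an error, so the helper returns False). With redis-py installed, redis.Redis.from_url(url).ping() performs the same check.

```python
import socket
from urllib.parse import urlparse

BROKER_URL = "redis://127.0.0.1:6379/0"  # same value as broker_url in celeryconfig.py


def redis_is_reachable(url: str, timeout: float = 1.0) -> bool:
    """Return True if the Redis server at `url` answers PING with +PONG."""
    parsed = urlparse(url)
    host = parsed.hostname or "127.0.0.1"
    port = parsed.port or 6379
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(b"PING\r\n")  # Redis accepts inline commands like this
            reply = sock.recv(64)
    except OSError:  # connection refused, timed out, host unreachable, ...
        return False
    return reply.startswith(b"+PONG")


if __name__ == "__main__":
    print(redis_is_reachable(BROKER_URL))
```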

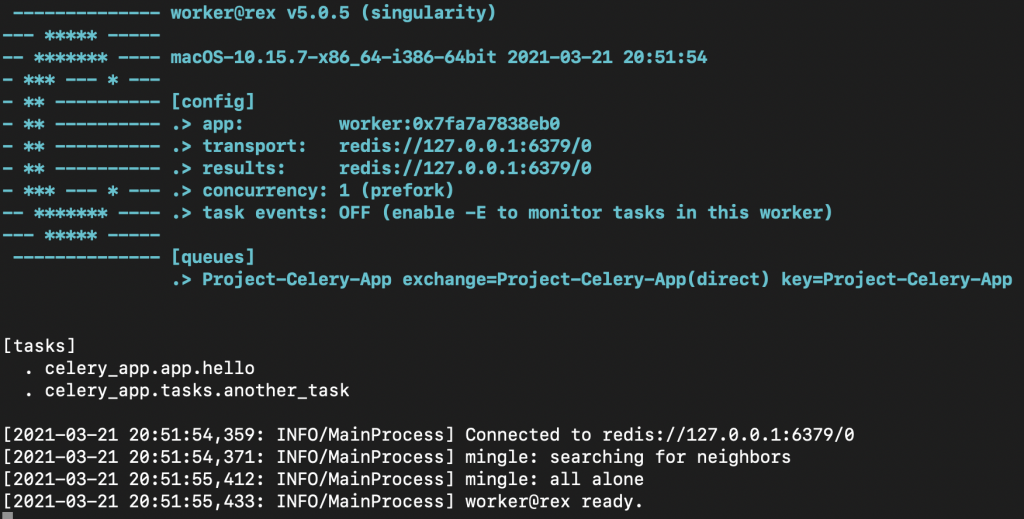

Next, start the Celery worker from the outer celery_app directory with the virtual environment activated. If everything is set up properly, the worker prints a start-up banner whose [tasks] section lists the registered tasks.

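```shell
# -A: app module, -n: node name (%n expands to the host name),
# -l: log level, -c: number of concurrent worker processes
celery -A celery_app.app worker -n worker@%n -l INFO -c 1
```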

Notice that the Celery application registered the two task functions, hello and another_task, on start-up. These functions can now be called to send background tasks.

Sending a background task and retrieving its output in Celery

Sending a task and retrieving its output takes just two calls: delay on the task and get on the returned result. As mentioned earlier, Celery also has more advanced features: chains, which run tasks one after another; groups, which run several tasks in parallel; and chords, which run a group and then a callback task once every task in the group has finished.

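```python
from celery_app.app import hello

result = hello.delay("Rex")  # sends the job to the broker and returns an AsyncResult

result.get()  # blocks until the worker finishes, then returns 'Hello Rex'
```

Take note that get waits for the background task to finish before it returns the output, so at that point the call is synchronous again. Be mindful of when you retrieve results in Celery; if you do need to wait, pass a timeout, for example result.get(timeout=10), so the caller is not blocked indefinitely.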

In this tutorial, we have learned how to set up a Python Celery application, covered some of the basic Celery configuration options, and used Redis as the message broker.

To use Amazon Simple Queue Service (SQS) as the message broker, please follow my next tutorial.